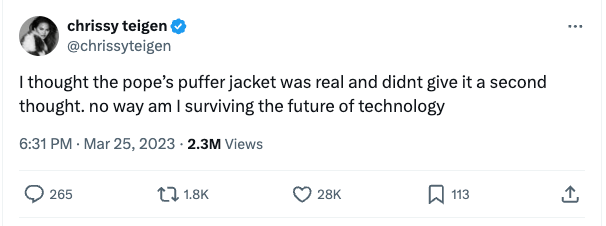

The Pope didn’t wear Balenciaga, but Chrissy Teigen thought he did. The AI-generated image was so believable that she didn’t give the Pope’s white puffer jacket “a second thought.”

So far, we’ve mostly used AI to make funny pictures, but the future looks more painful. Your estranged spouse might create a fake recording of you to take your kids away, and anyone can make the President say whatever they want.

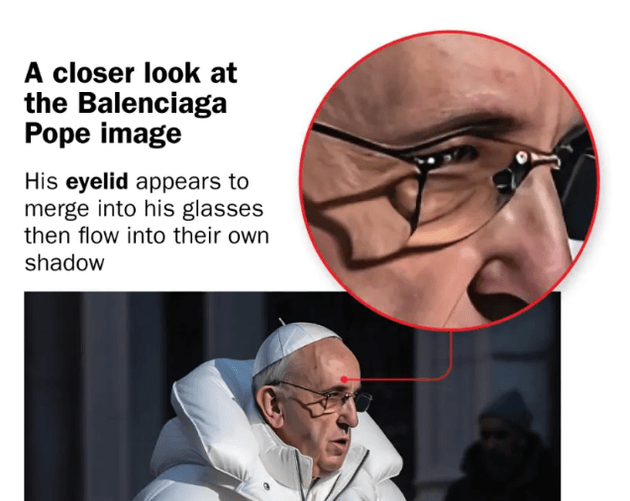

The internet is full of tips on how to spot AI-generated photos, but this approach isn’t foolproof. While the Pope’s glasses may merge with his eyelid in AI-generated photos today, they won’t for long. Artificial intelligence will continue to get better, and unfortunately, our intelligence will stay the same. Generating AI images and detecting them (even with software) is an ongoing arms race with no end in sight.

Surviving the future of technology requires a different approach. Surprisingly, surviving the future involves using the methods of those who record the past: archivists. Archivists like the USC Shoah Foundation are well known for their efforts in recording the stories that need to be told, so that the past is not forgotten. It’s this same archival process that can save us, if we use the right technology.

Here’s the different approach: instead of taking a photo and trying to find flaws with it, what if we were able to ask the Internet where the image came from and trust the answers? What if we could ask who exactly is claiming the photo is true, when it was taken, by whom, and whether it was edited?

What if we were able to choose which news organizations and researchers we want to trust, and ask them specifically about the photo? This different approach shifts the burden of proof to better fit a world where AI is cheap and investigations are expensive. When there’s a constant firehose of AI-generated content, we can’t assume photos are real until they’re proven fake. Instead, we can create platforms, spaces, and resources that people can turn to, to find content that is verifiable, with attestations from those we trust.

Knowing which images we should trust is no small task. But there are elements of blockchain technology (cryptographic hashes, timestamping services, and digital signatures) that can help. Note I didn’t say blockchains themselves. What I want to use are the cryptographic tools that blockchains are built with.

Cryptographic hashes, timestamping services, and digital signatures are nothing new. Timestamping services have been around since 1991 and are a part of the original Bitcoin whitepaper. Digital signatures were introduced back in the 1970s. It seems cryptographers have a peculiar way of talking: what they say is only heard by the rest of the world decades later, if at all. So if you haven’t heard about any of these things before, that’s entirely okay. Hardly anyone else has either, even though the cryptography itself is used extensively in tech.

Surprisingly, I’ve found the easiest way to explain all of this is with a truck seal. It looks like this:

In the trucking industry, a truck seal is a plastic loop used to seal the cargo of a semi-truck. It has a serial number on it that is recorded by the shipper. At receiving, the receiver cuts the truck seal off and compares the serial number with the number that was transmitted separately by the shipper. If they match, we know the truck wasn’t tampered with.

Because a truck seal could have been cut off and replaced in transit by a different seal, it’s important to compare the serial numbers. It’s also important that the serial number is transmitted separately. We don’t want the recorded serial number to be easy to change if the truck seal is cut and a new seal is applied in transit. That would defeat the purpose of the check.

We can use this same concept to secure images and other forms of data instead of trucks. And instead of plastic seals, we can use a cryptographic hash.

A cryptographic hash of an image is like a truck seal, except the hash isn’t an assigned serial number. It’s made of numbers, but it’s a unique id that acts as a short encoded summary, or digest, of everything that is in the image. If anything at all in the image changes, even a single pixel, a different hash is produced. Unlike an assigned serial number, the hash can also be derived by anyone who has the image data.
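To make the "any change produces a different hash" property concrete, here is a minimal sketch in Python. It assumes SHA-256 as the hash function (the article doesn't name one) and uses stand-in bytes rather than a real image file. The helper name `image_hash` is made up for illustration.

```python
import hashlib

def image_hash(data: bytes) -> str:
    """Return the SHA-256 digest of the raw file bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()

# Stand-in for the bytes of an image file (assumption for illustration).
# With a real file you would use: open("photo.jpg", "rb").read()
original = bytes(range(256)) * 4

# Flip a single bit to simulate the smallest possible edit.
tampered = bytearray(original)
tampered[10] ^= 0x01
tampered = bytes(tampered)

original_digest = image_hash(original)
tampered_digest = image_hash(tampered)

print(original_digest)
print(tampered_digest)
print(original_digest == tampered_digest)  # False
```

Note that this hashes the file's bytes, so a change to embedded metadata would also change the digest, not only a change to the pixels.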

Like a truck seal, recording the cryptographic hash of a file allows us to ensure that the contents of the file have not changed since we first recorded the hash. And like a truck seal, we can’t guarantee anything about tamper-evidence until we record the hash.

So what does this get us? If anyone asks us if an image has been tampered with, and we’ve previously hashed the image and recorded the hash, we can look through our computer, find the hash that we’ve recorded, and compare that old hash to the new hash of the current image. Hashing enables us to say “this image hasn’t changed since I saw it last,” and “this photo is the same as the one from the defendant’s Facebook page,” which is pretty helpful compared to having to visually look at the two images.
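The record-then-compare step might look something like the sketch below. It again assumes SHA-256 and stand-in bytes, and `sha256_hex` and `is_unchanged` are hypothetical helper names, not part of any particular tool.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Hash raw bytes and return the digest as hex."""
    return hashlib.sha256(data).hexdigest()

def is_unchanged(current: bytes, recorded_digest: str) -> bool:
    """True if the current bytes hash to the digest recorded earlier."""
    return sha256_hex(current) == recorded_digest

# Earlier: we see the image for the first time and record its hash.
first_seen = b"stand-in bytes for the photo as first downloaded"
recorded_digest = sha256_hex(first_seen)

# Later: someone hands us two copies and asks whether either was altered.
same_copy = b"stand-in bytes for the photo as first downloaded"
edited_copy = b"stand-in bytes for the photo, lightly retouched"

print(is_unchanged(same_copy, recorded_digest))    # True
print(is_unchanged(edited_copy, recorded_digest))  # False
```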

Using just a cryptographic hash has some flaws. For one thing, you could lie about the hash that you recorded earlier: “Yup, this [tampered-with] image hashes to the same thing I recorded!” It’s just like how the trucker could cut the truck seal and then sneakily replace the serial number, if they were the only one with the paperwork. So we need to record the hash of the image someplace else.

There’s a second flaw in only hashing: when we’re trying to investigate faked media, we usually care about time. For instance, consider this deepfake of Jim Carrey starring in The Shining. If we had two timestamped images and no other information, one of Jack Nicholson starring in The Shining in 1980, and one starring Jim Carrey in 2019, which should we believe? We should probably believe the earlier one, because tampered images don’t go back in time. The original is by definition the oldest. This same logic was at work when Reuters proved that this image of Dutch farmers protesting was actually from 2019, not 2022, and therefore was not an accurate depiction of the protests in 2022.

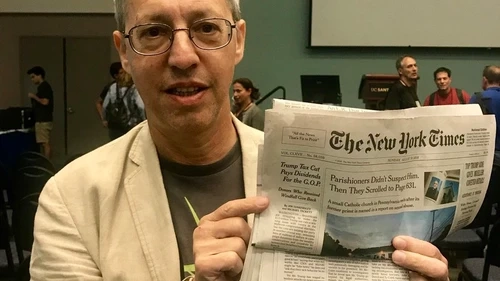

So, we need to hash images, and store the hashes somewhere else—somewhere else that records the time. Luckily, this is exactly what a timestamping service does. You can (very crudely) think of a timestamping service as a service that records the serial numbers of truck seals in the classified ads of the New York Times. Of course, no one does it—for obvious reasons—but let’s explore what it would achieve.

If you record the serial number of a truck seal in the classified ads, then you know the truck was sealed before the newspaper was printed. How does this apply to images? If you record the cryptographic hash of an image (the unique id that creates a pixel-perfect fingerprint of an image, that is so “random” it effectively can’t be known before the image exists) in a newspaper, then you know that the image existed before the newspaper was printed, and more than that, everyone else knows it too. It is very unlikely that the NYT is going to go around and collect everyone’s newspapers and replace them with a new version with a different hash value. And if they print newspapers with conflicting values, that can be proved afterwards with just two differing copies.

This is all great, but it’d be impossible to print hashes of all the images in the New York Times classified section: there are just too many, and it’d be a terrible read. Luckily, we already know how to fix this. Remember how a cryptographic hash is a digest or summary of something? We can go a step further, and hash the hashes to create a fingerprint of a collection of fingerprints. Then, the timestamping service just has one hash to record in the newspaper: the one that summarizes everything they’ve received in that time period.
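One common way to "hash the hashes" is a Merkle tree, where hashes are paired and hashed again until a single root remains. The sketch below is a simplified illustration, assuming SHA-256 and a duplicate-the-last-node rule for odd-sized levels. It is not the exact construction used by any specific timestamping service, and `merkle_root` is a name chosen here for illustration.

```python
import hashlib
from typing import Sequence

def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root(leaf_hashes: Sequence[bytes]) -> bytes:
    """Combine many hashes into one by hashing pairs, level by level."""
    if not leaf_hashes:
        raise ValueError("need at least one hash")
    level = list(leaf_hashes)
    while len(level) > 1:
        if len(level) % 2 == 1:
            # Odd number of nodes: pair the last one with itself (sketch-level rule).
            level.append(level[-1])
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

# Hashes received by the service during one time period (stand-in data).
leaves = [sha256(b"image-a"), sha256(b"image-b"), sha256(b"image-c")]
root = merkle_root(leaves)

# Swapping in a different image changes the single published value.
altered_leaves = [sha256(b"image-a"), sha256(b"image-B"), sha256(b"image-c")]
altered_root = merkle_root(altered_leaves)

print(root.hex())
print(root == altered_root)  # False
```

Only `root` would need to be published. A tree, rather than one hash over a flat list, lets the service later show that a particular image hash was included without handing over every other hash from that period.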

A number of timestamping services exist, and one of them did actually record their hashes in the New York Times. But others like OpenTimestamps use blockchains instead as the place to record the hash “somewhere else.”

With cryptographic hashes of images stored with timestamping services, we can trust that an image existed at a certain point in time, without needing to trust the person telling us so. We can prove it to ourselves by taking a copy of the original file, hashing it, and checking that the output is the same as what was originally registered on the timestamping service. In a world where we are increasingly losing trust in institutions and may not know who to trust, especially as AI-generated propaganda becomes more prevalent, it is essential that we can prove it ourselves.

This is fantastic, but we’ve still got some problems. For example, if we are both investigating an image, how do we know it’s the same image we’re referencing, and not an altered version? And if you tell me that Reuters says the image is fake, how do I know that you’re not lying about what Reuters is saying? And what about privacy concerns?

Cryptography can help us with these problems too. Remember again how the cryptographic hash of an image was a unique id for it? We can use that id to refer to the image, rather than describing it by its filename and location. Using the cryptographic hash as the id is called content addressing, as in content addressed storage. Now we can know for sure whether we’re talking about the same image. And we can easily talk about one image being an edited version of another.
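As a rough illustration of content addressing, the sketch below stores blobs under their own SHA-256 digest and describes an edit as a relationship between two addresses. The `ContentStore` class and the `edited_from` record are hypothetical, made up for this example rather than taken from any existing system.

```python
import hashlib

class ContentStore:
    """A toy in-memory store where the key is the hash of the content."""

    def __init__(self):
        self._blobs = {}

    def put(self, data: bytes) -> str:
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]
        # Anyone can re-check that the content really matches its address.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("stored content does not match its address")
        return data

store = ContentStore()
original_id = store.put(b"stand-in bytes for the original photo")
cropped_id = store.put(b"stand-in bytes for a cropped version")

# Two people who hold the same bytes compute the same id independently.
same_id = hashlib.sha256(b"stand-in bytes for the original photo").hexdigest()
print(original_id == same_id)  # True

# A statement about the images refers to them by id, not by filename.
relation = {"image": cropped_id, "edited_from": original_id}
print(relation)
```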

Using the cryptographic hash as the id helps us with privacy concerns as well. While content will always need to be removed for a variety of reasons, we can timestamp or talk about an image without ever revealing the image itself, because the cryptographic hash doesn’t reveal the underlying image.

And in the case of you telling me that Reuters says the image is fake, I can know that you’re telling me the truth even when you’re relaying second-hand information, if Reuters digitally signs the information. Using digital signatures makes it much easier to double-check this information, as they allow us to “go to the source” even when we get the information second-hand. A digital signature is also different from an electronic signature, although they sound like the same thing. An electronic signature is the kind of image you make when you sign on the tablet at the grocery store. A digital signature is cryptography that lets you create something analogous to a branded seal, a seal that can only be created by you because you know the secret. And anyone who looks at the branded seal can confirm that you stamped it.

Digital signatures allow us to “stamp” information in a way that cannot be forged. And unlike a paper signature, they can’t be cut and applied to a different document. This means that we can share information from sources, and if the sources have digitally signed that information, we know that they said it, even if we don’t quite trust the person who relayed the information.
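To show the sign-then-verify idea without any third-party library, here is a teaching sketch of a Lamport one-time signature, a scheme built entirely from hashes. This is an assumption made for illustration: it is not what Reuters or any tool mentioned in this piece uses, each key pair must sign only one message, and real systems would normally rely on a vetted library and a scheme such as Ed25519.

```python
import hashlib
import secrets

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def _bits(message: bytes):
    """The 256 bits of the message's SHA-256 digest, most significant first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def generate_keypair():
    # Private key: 256 pairs of random secrets. Public key: their hashes.
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public

def sign(private, message: bytes):
    # Reveal one secret from each pair, chosen by the matching digest bit.
    return [private[i][bit] for i, bit in enumerate(_bits(message))]

def verify(public, message: bytes, signature) -> bool:
    if len(signature) != 256:
        return False
    return all(
        _h(revealed) == public[i][bit]
        for i, (revealed, bit) in enumerate(zip(signature, _bits(message)))
    )

# A source signs a statement; the public key is what everyone else knows.
private_key, public_key = generate_keypair()
statement = b"The protest photo was taken in 2019, not 2022."
signature = sign(private_key, statement)

# Someone relays the statement. We check it against the source's public key.
print(verify(public_key, statement, signature))  # True

# The signature does not transfer to a different statement.
altered = b"The protest photo was taken in 2022."
print(verify(public_key, altered, signature))    # False
```

The relayer never needs the private key, and we never need to trust the relayer: the check depends only on the statement, the signature, and a public key we already associate with the source.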

With these three tools (cryptographic hashes, timestamping services, and digital signatures), we as the general public can act as archivists for our future. By recording images as they exist now, and sharing digitally signed and timestamped information about the images, we can create a world in which we can ask the internet about an image. A world in which we can automatically get information about what is real from researchers we choose to trust, with information that we can independently verify.

As part of my work with Starling Lab, we built a new tool called Authenticated Attributes to enable researchers to do exactly that: share authenticated information from their investigations so that people have spaces to turn to, to find content that is verifiable, with attestations from those they trust. You can learn more about Authenticated Attributes in this video, and can find the demo code in this GitHub repository.

The solution to the problem of AI-generated images isn’t to try to detect the “tells” of AI. That’s a battle that’s lost every time AI improves. The solution is to use technology to record what’s real, and share it with each other for our future.

A version of this piece was originally published on the Starling Lab Dispatch.