An interesting proposal popped up on Twitter this week from an organization called The Democracy Earth Foundation. They claim to have solved the problem of internet voting, and they list UC Berkeley, TED, Y Combinator, and the MIT Technology Review as sponsors. There are also some big names among the donors and advisors. For instance, a co-founder of Reddit is listed as an advisor, and even Satoshi Nakamoto himself is listed as a donor, although I suspect that’s a cheeky joke.

Their solution, called Sovereign, is “a blockchain liquid democracy.” That’s a lot to unpack, so before we go into the specifics, let’s start with why this is such a hard problem.

Voting itself is difficult. Even in the real world, we must defend elections against fraudulent voter registration, vote tampering, and corrupt officials. And even if everyone is honest, the voting rules themselves can be flawed: for example, a third-party candidate with little support can change which of the two main candidates wins.
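To make the spoiler problem concrete, here is a minimal Python sketch of plurality rule, where only first choices are counted. The ballots are hypothetical numbers invented for illustration: when minor candidate C runs, C's 15 supporters (who prefer B to A) stop voting for B, and A wins even though most voters prefer B to A.

```python
from collections import Counter

def plurality_winner(ballots):
    """Each ballot is a ranked list of candidates; plurality counts only first choices."""
    tally = Counter(ballot[0] for ballot in ballots)
    return tally.most_common(1)[0][0]

# Hypothetical electorate of 100 voters.
# Without C on the ballot, C's 15 supporters vote for their next choice, B.
two_way = [["A", "B"]] * 45 + [["B", "A"]] * 55
# With C on the ballot, those 15 voters split off from B.
three_way = [["A", "B", "C"]] * 45 + [["B", "A", "C"]] * 40 + [["C", "B", "A"]] * 15

print(plurality_winner(two_way))    # B
print(plurality_winner(three_way))  # A
```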

Voting on the internet is even harder, because in addition to all the problems of voting, we have the problem of identifying voters. In real life, we use government IDs and prosecute voter fraud. However, on the internet…

But seriously, on the internet it’s very easy to gain additional votes by creating additional accounts. So any internet voting system needs to prevent the creation of multiple accounts in order to be fair. In computer science, these fake identities are known as Sybils (Douceur 2002). They cause problems in reputation systems, in voting, and in consensus algorithms like those in Bitcoin and Ethereum. Proof of work and proof of stake are two ways to address this problem: proof of work requires showing that you’ve done computation, and proof of stake requires showing that you’ve staked tokens as collateral. Both work only because the computation and the tokens can’t be faked. Unfortunately, both methods are costly and ill-suited for voting applications, where prior wealth shouldn’t matter.
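As a rough illustration of the proof-of-work idea, here is a hashcash-style sketch in Python. It is not Bitcoin's actual protocol: the `name:nonce` message format, the function names, and the difficulty parameter are all assumptions. Minting an identity takes about 2^difficulty_bits hash attempts on average, while checking one takes a single hash, so every extra Sybil costs the same computation again, and whoever owns the most hardware can afford the most identities.

```python
import hashlib
from itertools import count

def _work(name: str, nonce: int) -> int:
    """Hash the hypothetical message "name:nonce" and read the digest as a 256-bit integer."""
    digest = hashlib.sha256(f"{name}:{nonce}".encode()).digest()
    return int.from_bytes(digest, "big")

def mint_identity(name: str, difficulty_bits: int) -> int:
    """Find the smallest nonce whose hash has at least `difficulty_bits` leading zero bits."""
    target = 1 << (256 - difficulty_bits)
    for nonce in count():
        if _work(name, nonce) < target:
            return nonce

def verify_identity(name: str, nonce: int, difficulty_bits: int) -> bool:
    """Verification costs a single hash, however long the search took."""
    return _work(name, nonce) < (1 << (256 - difficulty_bits))

nonce = mint_identity("alice", difficulty_bits=16)
print(nonce, verify_identity("alice", nonce, difficulty_bits=16))
```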

Proof of Identity

Sovereign, however, relies on what the Democracy Earth Foundation calls “Proof of Identity.” In short, every potential voter must record a video of themselves stating their name and other information. Other users are then shown two randomly selected videos and asked to judge whether they show the same person.
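Here is a minimal Python sketch of one round of this procedure. The function and account names are hypothetical, and it simplifies in two ways that Sovereign may not: a single judge's verdict is final, and both accounts in a matched pair are flagged, since the description above doesn't say how Sovereign decides which of the two is the original.

```python
import random
from typing import Callable, Dict, Set

def judging_round(
    videos: Dict[str, str],
    judge: Callable[[str, str], bool],
    flagged: Set[str],
    rng: random.Random,
) -> None:
    """Run one comparison: show a judge two randomly chosen videos and, if the
    judge says they show the same person, flag both accounts as replicants."""
    a, b = rng.sample(sorted(videos), 2)
    if judge(videos[a], videos[b]):
        flagged.update({a, b})

# Hypothetical data: each "video" is stood in for by the name of the person who recorded it.
videos = {"acct1": "alice", "acct2": "alice", "acct3": "bob"}
honest_judge = lambda v1, v2: v1 == v2   # a perfectly accurate, honest judge
flagged: Set[str] = set()
rng = random.Random(0)
for _ in range(200):
    judging_round(videos, honest_judge, flagged, rng)
print(sorted(flagged))
```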

Some basic assessment questions for Sovereign and other voting systems are:

- How easily can people create Sybils by subtly changing their appearance and fooling the human judges?

- How effective is this mechanism at identifying Sybils if we assume they are easy to spot and the judges are honest?

- What motivates the judges to do the comparison? What are the incentives for users of Sovereign?

- Can factions hijack the judging process and disenfranchise other factions, such as vulnerable minorities?

Let’s start with the video. Exactly how easy is it to present yourself as a different person and fool the human judges?

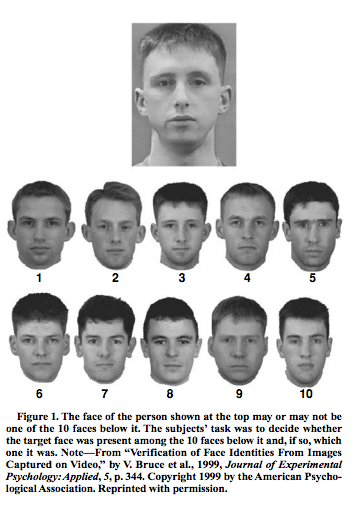

It turns out it’s probably easy to fool the judges. Studies show that human beings are very bad at matching unfamiliar faces, even from video. In one study, subjects who were judging based on photographs “picked the correct person on only about 70% of occasions. When the target was absent, subjects nevertheless chose someone on roughly 30% of occasions.”

Additionally, superficial features can be highly misleading. The Democracy Earth Foundation tries to control for this by requiring that people not wear “eyeglasses, hats, makeup or masks of any kind.” Leaving aside the fact that they have no way of enforcing a no-makeup policy (well-applied makeup looks just like a natural face), it’s very easy for men to drastically change their look just by changing their facial hair. So there are at least several easy ways to create multiple identities that would get past human judges.

Interestingly, human beings do significantly better at recognizing familiar faces than unfamiliar ones. Unfortunately, this contributes to what is known as the Out-Group Homogeneity effect: people often find it more difficult to distinguish members of an ethnic out-group than members of their own ethnic group. In Sovereign, this could easily lead to subconscious discrimination against other ethnic groups, since videos of two different out-group members are more likely to be judged a match.

The Sovereign white paper states that “Anyone who ends up being voted as a replicant [aka Sybil] will see his or her granted votes useless.” Given the likelihood of false positives, it’s probable that Sovereign will often take away the right to vote from real people. Furthermore, if the errors aren’t random but instead caused by the out-group homogeneity effect, certain minority groups could be disenfranchised as a result.
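A simple model shows why false positives add up. Suppose each honest voter's video is compared against other people's videos `comparisons` times, and each comparison independently produces a false match with probability `false_match_rate`; then the chance of being flagged at least once is 1 - (1 - rate)^comparisons. This sketch assumes a single false match is enough to flag someone (a rule requiring several independent judges to agree would lower these numbers), and the rates are hypothetical, chosen only to show how a modest gap in per-comparison error between in-group and out-group faces compounds.

```python
def prob_wrongly_flagged(false_match_rate: float, comparisons: int) -> float:
    """Chance an honest voter is flagged at least once, if each of `comparisons`
    comparisons against a different person independently yields a false match."""
    return 1 - (1 - false_match_rate) ** comparisons

# Hypothetical per-comparison error rates: judges err more on unfamiliar-group faces.
for group, rate in [("in-group", 0.02), ("out-group", 0.05)]:
    print(group, round(prob_wrongly_flagged(rate, comparisons=10), 3))
```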

Even if judges were 100% accurate at identifying fake accounts, how often would they even see two videos of the same person? It turns out it would be very rare. For example, imagine there are n = 1000 videos of voters, and only 2 of them match. (In other words, only one account has a duplicate.) If each comparison shows a randomly chosen pair, the probability that the matching videos appear together is 1 out of (1000 choose 2), or 1/499,500. So on average, the duplicate pair comes up for judgment only once every 499,500 comparisons. Let’s generously assume that each of the 1000 people watches and judges one pair of the roughly 3-minute videos per day, for 1000 comparisons per day. On average, it will take about 500 days, well over a year, before the duplicate pair is even shown to a judge, let alone recognized as the same person.
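The arithmetic above can be checked in a few lines of Python. The helper name and its parameters are hypothetical; it assumes each comparison draws one of the C(n, 2) pairs uniformly at random and independently, so the number of comparisons until a matching pair first appears is geometrically distributed with mean C(n, 2) divided by the number of matching pairs.

```python
from math import comb

def expected_days_until_shown(n_videos: int, matching_pairs: int, comparisons_per_day: int) -> float:
    """Expected number of days before any of `matching_pairs` duplicate pairs is
    shown to a judge, if every comparison is an independent, uniformly random pair."""
    pairs = comb(n_videos, 2)
    # The wait is geometric with success probability matching_pairs / pairs,
    # so its mean is pairs / matching_pairs comparisons.
    expected_comparisons = pairs / matching_pairs
    return expected_comparisons / comparisons_per_day

print(comb(1000, 2))                                   # 499500
days = expected_days_until_shown(1000, matching_pairs=1, comparisons_per_day=1000)
print(days, days / 365)                                # about 500 days, roughly 1.37 years
```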

You might think that if there are more duplicates, they’ll be easier to find. That’s true. However, it mainly helps when many of the duplicates belong to the same person, since each additional identity creates a match with every one of that person’s other identities. For instance, assume the 1000 voters are actually only 500 people, each with one fake identity. In this case, every single voter is violating the rules and should be kicked out, but because any given voter and their fake identity will rarely be shown together, each comparison has only a 1 in 499,500 (about 0.0002%) chance of exposing a particular Sybil. Even counting all 500 duplicate pairs, only about 1 comparison in 1,000 shows a duplicate at all.
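Here is a Python sketch that checks the numbers for the 500-person example, both exactly and with a quick Monte Carlo simulation. The function name is hypothetical, and it assumes, as above, that every comparison draws one of the possible pairs of videos uniformly at random and that the judges are perfectly accurate.

```python
import random
from math import comb

def duplicate_hit_rate(n_people: int, comparisons: int, seed: int = 0) -> float:
    """Estimate how often a random comparison shows two accounts owned by the
    same person, when each of n_people has exactly two accounts."""
    rng = random.Random(seed)
    n_accounts = 2 * n_people
    owner = [i // 2 for i in range(n_accounts)]  # accounts 2i and 2i+1 belong to person i
    hits = 0
    for _ in range(comparisons):
        a, b = rng.sample(range(n_accounts), 2)
        if owner[a] == owner[b]:
            hits += 1
    return hits / comparisons

n_people = 500
pairs = comb(2 * n_people, 2)      # 499,500 possible pairs of videos
p_particular = 1 / pairs           # chance one comparison exposes a given person's Sybil
p_any = n_people / pairs           # chance one comparison shows any duplicate pair
print(f"{p_particular:.7%}  {p_any:.3%}")
print(duplicate_hit_rate(n_people, comparisons=100_000))
```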

Computer Science, Game Theory and Mechanism Design

So far we’ve only covered the case in which the potential voters are dishonest. But what if the judges are dishonest? For instance, does a judge have an incentive to falsely say that two different people are actually duplicates?

The Democracy Earth Foundation says that the judges are “constantly incentivized to maintain legitimacy within the network in order to keep votes as a valuable asset: the success of the network on detecting replicants (duplicated identities) determines the scarcity of the vote token. The legitimacy of any democracy is based on the maintenance of a proper voter registry.”

This statement has two obvious problems. First, if votes are a valuable asset, there is just as much pressure to falsely accuse people of being replicants as there is to find real ones. False accusations are very easy, and they can even disenfranchise your political opponents at the same time. The Democracy Earth Foundation’s argument doesn’t prove accuracy; instead, it shows that there are huge incentives to lie. Second, as long as no one finds out, the voting system stays legitimate in the public eye even with rampant fraud.
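The scarcity argument cuts both ways. This sketch uses a hypothetical electorate (the faction names and sizes are made up) and assumes that a voter flagged as a replicant simply loses their vote: removing a few dozen honest voters from one faction can flip a close outcome, and every removal also makes each remaining vote, including the accuser’s, worth slightly more.

```python
def vote_shares(faction_sizes: dict, wrongly_removed: dict) -> dict:
    """Each faction's share of the remaining votes after some of its members are
    (wrongly) flagged as replicants and lose their votes."""
    remaining = {f: n - wrongly_removed.get(f, 0) for f, n in faction_sizes.items()}
    total = sum(remaining.values())
    return {f: n / total for f, n in remaining.items()}

# Hypothetical electorate: faction B is the honest majority.
factions = {"A": 480, "B": 520}
print(vote_shares(factions, {}))           # B holds 52% of the vote
print(vote_shares(factions, {"B": 50}))    # after 50 false accusations, A holds about 50.5%
```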

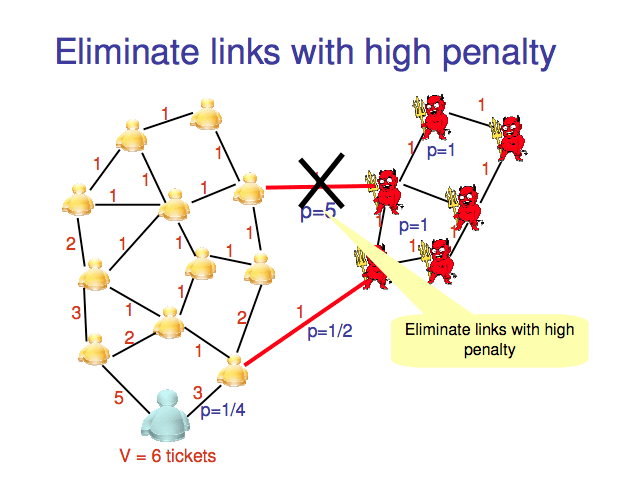

Thankfully, we already have a field of study that assesses what bad actors can do: game theory. A general principle that applies here is that to prevent Sybils or collusion, you should make creating a Sybil or colluding more trouble than it’s worth. That’s a turn of phrase, but in this case I mean it literally: the expected cost of creating a Sybil should be greater than the benefit from having one.
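One way to write the principle down, as a sketch under simplifying assumptions: creating a Sybil costs `creation_cost`, it is caught with probability `detection_prob` (which then costs the attacker `penalty`), and it delivers `benefit` only if it goes undetected. All four quantities are hypothetical and in arbitrary units, and the detection probability below assumes honest, perfectly accurate judges, the 499,500 possible pairs from the 1000-voter example, and a 30-day window of 1000 comparisons per day.

```python
def sybil_is_deterred(creation_cost: float, detection_prob: float,
                      penalty: float, benefit: float) -> bool:
    """True if a Sybil's expected cost exceeds its expected benefit,
    assuming the benefit is only collected when the Sybil goes undetected."""
    expected_cost = creation_cost + detection_prob * penalty
    expected_benefit = (1 - detection_prob) * benefit
    return expected_cost > expected_benefit

# Chance that one particular duplicate pair is shown at least once in
# 30 days x 1000 comparisons, out of 499,500 equally likely pairs.
p = 1 - (1 - 1 / 499_500) ** 30_000
print(round(p, 3))
# Hypothetical units: recording an extra video is cheap, the vote is worth a lot.
print(sybil_is_deterred(creation_cost=1, detection_prob=p, penalty=10, benefit=50))   # False
```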

It’s not clear that the Democracy Earth Foundation has done this kind of assessment at all. To be fair, looking at the problems of internet voting through the lens of game theory is relatively new and multidisciplinary.

There are a couple of different approaches. One is social choice, an area of game theory that uses axioms to assess voting rules. For instance, Vincent Conitzer, a computer science professor at Duke University, and others have been searching for voting mechanisms that are false-name-proof: mechanisms in which no agent ever has an incentive to use fake accounts. Another approach uses the structure of the social network, via graph theory, to build algorithms that detect and remove Sybils, as in SybilGuard and SumUp. This is still an active area of research, and Sybil resistance remains very difficult to achieve.
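To see what false-name-proofness rules out, here is a toy Python example (the voters and the yes/no question are hypothetical). Under ordinary one-account-one-vote majority rule, a single voter who registers two extra accounts under false names flips the result, so majority rule is not false-name-proof.

```python
from collections import Counter

def majority_outcome(votes: dict) -> str:
    """One vote per account; 'yes' wins only if it gets more votes than 'no'."""
    tally = Counter(votes.values())
    return "yes" if tally["yes"] > tally["no"] else "no"

honest = {"alice": "yes", "bob": "no", "carol": "no"}
# Alice registers two extra accounts under false names.
with_sybils = {**honest, "alice_2": "yes", "alice_3": "yes"}

print(majority_outcome(honest))       # no
print(majority_outcome(with_sybils))  # yes
```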

The bottom line is, any voting system using smart contracts has to be Sybil-resistant in order to be functional. Otherwise, there is no guarantee that the outcome of the vote is true to the wishes of the voters. Sovereign, the Democracy Earth Foundation’s voting system, isn’t Sybil-resistant, and furthermore, is hugely susceptible to fraud and abuse. Granted, the released publication is version “0.1,” but as published, Sovereign is simply not going to be able to work.

Originally published on Medium