A big thank you to Rick Dudley, Matthew Di Ferrante, and Kaliya Young for reviewing drafts of this post. All errors remain my own.

Soulbound Tokens (SBTs)

A recent paper by Glen Weyl, Puja Ohlhaver, and Vitalik Buterin outlines what they call “Decentralized Society” (DeSoc):

“a co-determined sociality, where Souls and communities come together bottom-up, as emergent properties of each other to co-create plural network goods and intelligences, at a range of scales.”

How do we accomplish this? According to the paper, with:

“accounts (or wallets) holding non-transferable (initially public) ‘soulbound’ tokens (SBTs) representing commitments, credentials, and affiliations.”

In this post, I’ll explain why “commitments, credentials, and affiliations” are better understood as claims. I’ll argue that claims should NEVER be public non-transferable tokens, and instead, claims should be statements digitally signed with Ethereum private keys, which I call signed claims.

I should point out—I’m not a knee-jerk tech critic. I’m a software engineer in the blockchain space who first owned bitcoin in 2013. I’ve got no bone to pick here, other than to ensure that we’re not building badly designed things that harm people.

Decentralized Society (DeSoc) Is An Admirable Goal

I’ll be honest: I find the paper’s definition of DeSoc confusingly vague. But I do agree with the aim generally. Here’s my attempt to steelman the argument:

Blockchains were created to bypass harmful intermediaries. Depending on your political views, you might focus on a particular subset: big banks, democracies captured by wealth inequality, authoritarian regimes, abusive record labels, nanny-state governments, theocratic laws, credit bureaus, and data-siphoning big tech like Facebook and Google.

The commonality in these “harmful intermediaries” is that they are trying to use their power to control you and to control your consensual interactions with other people. Maybe they use their wealth to bully you into submission, or they demand a huge cut of your business, or they eavesdrop and sell your data, or at the extreme, they jail or kill you for doing things they don’t like.

Because we’ve been trying to bypass harmful intermediaries, we’ve designed systems where intermediaries are unnecessary. Unfortunately, that means we’ve unintentionally optimized for the use cases in which you don’t know or can’t trust your counterparty–where trust and knowledge are replaced by cold, hard collateral. For instance, you can take out a loan, but rather than having your credit-worthiness evaluated, you escrow cryptocurrencies worth much more than the loan amount. This works, but it leaves out anyone who doesn’t own enough collateral, or can’t possibly afford the opportunity costs of locking it up. Social relationships and social information have been replaced with token ownership.

Don’t get me wrong. We’ve accomplished a lot. We can send money to the other side of the world as easily as in the same town. We can trade with other people in different countries securely, escrowing our assets according to the rules encoded in smart contract code and not according to a human being’s discretion. We’ve turned seemingly intractable problems of human misbehavior into solvable tech problems, and that’s really cool.

But in trying to fight harmful intermediaries, we’ve forgotten about helpful intermediaries and the benefits of human relationships in general. In real life, our relationships with other people sustain us. We trust them, because we have some idea of who they are. We’ve gathered this knowledge over time, based on what we’ve witnessed and what other people have told us.

What if we could bring these relationships and this kind of social information into Web3? If we could do this, we could potentially make it safe enough to partially trust or rely on someone else, because we could ask them or their community to provide evidence of their trustworthiness. However, we have to make sure we’re empowering ourselves and not the harmful intermediaries.

Claims

Let’s label this kind of social information a claim.

A claim is a statement that someone makes about themselves or someone else, which may or may not be true.

For example, if I need to find a pet sitter for my dog, I might ask my neighbors for a recommendation. When my neighbor tells me “Jane Smith pet sat for me, and she did a good job”, that’s a claim. It could be false. Maybe my neighbor is confused. Or maybe my neighbor gets kickbacks from Jane’s business. But, if I’ve assessed my neighbor as generally accurate and truthful, this is a very useful claim. I’d feel comfortable hiring Jane to take care of my dog.

In real life, people make claims all the time, and even if we’re not conscious of it, we constantly evaluate the probability that the claims are true. Our understanding of reality is built atop a network of claims to which we assign various weights.

It’s important to note that an essential part of evaluating a claim is evaluating who made the claim, their expertise, their experiences, and their motivations. The process of evaluating a claim is very subjective and context-dependent. People will naturally disagree about the trustworthiness of other people, and trust depends on the context. For instance, you might not trust your broke younger brother to repay a loan, but he might be a caring and fun babysitter for your five-year-old.

Because this analysis is so subjective and context-dependent, we should be extremely skeptical of any proposal to “calculate over” claims to get objective results.

The Goal: Introducing claims to Web3

To continue steelmanning, I would say the aim of the paper is to introduce claims to Web3. While not new[1], this is a fantastic goal, in theory. The more fraud and misbehavior we’re able to prevent, the more we can safely cooperate with each other without having to rely on the harmful intermediaries that we’re trying to escape.

The question then, is, how do we do it? And how can we do it without further enabling the harmful intermediaries by giving them more data and more control over us?

What Are Soulbound Tokens?

What the heck are soulbound tokens? Even after reading the paper, I still don’t know.

You would think that “publicly visible, non-transferable (but possibly revocable-by-the-issuer) tokens” means on-chain tokens, but Glen Weyl has told me publicly that “token does not mean ‘on chain.’”

Snark aside, I hope I’ve conveyed the very real difficulty of identifying what the authors mean by “soulbound tokens.” If this is the official opinion of the authors, then “soulbound tokens” is a very bad, misleading name.

Additionally, combining a number of very different use cases under the name “soulbound tokens” has created significant confusion. For example, EIP-4973 is a current proposal in the Ethereum community to implement “soulbound tokens.” It was originally motivated by a desire to implement Harberger taxes on token transfers. The authors of EIP-4973 have published a blog post to clarify a number of misconceptions. One of their recent additions explains that they agree that claims as defined here “make no sense to be stored on-chain”. They say that while there might be some use cases, generally “ABTs shouldn’t be used as credentials on-chain” (ABT, or account-bound token, is the EIP’s name for its tokens). They explain further: “our focus is on creating new type of ownership experience”[2].

For the purposes of making progress, I’d love to get clarification on the following:

- Are soulbound tokens actually tokens? (Let’s define tokens as assets mapped to addresses, as recorded in a smart contract on a blockchain.)

- Are soulbound tokens on the Ethereum mainnet? If not, where are they?

- Are soulbound tokens ERC-721 tokens, but non-transferable and potentially revocable?

- Is EIP-4973 what you envisioned?

- What exact data is stored on-chain vs. off-chain? The paper is inconsistent.

- How is the person making the claim represented? In other words, if it’s a token, how do we know who minted the token? How legible is this?

- Does the person making the SBT sign any claim with a private key?

The thing that I really want to prevent is representing claims as tokens on blockchains. Maybe we’re already agreed that we should never do this. If so, that’s great and my work here is done. But, the paper mentions public, non-transferable tokens on public blockchains as a place to start, and the people working on implementing soulbound tokens are building exactly that. So, at least right now, there’s still a significant need to explain why using tokens to represent claims is a terrible idea.

Signed Claims: Claims with Digital Signatures

Before we get to why using tokens to represent claims is a terrible idea, I want to explain a very concrete alternative: claims that are digitally signed with Ethereum private keys. Let’s call them signed claims.

If you’ve made an Ethereum transaction, you’ve created a digital signature. Your account is a keypair: a public key (which your address is derived from) and a private key. Your private key was used to sign the transaction[3].
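To make the keypair-to-address relationship concrete, here is a minimal pure-Python sketch. It multiplies the secp256k1 generator point by the private key to get the public key. It then hashes the 64-byte uncompressed public key with Keccak-256 and keeps the last 20 bytes as the address. Because Python’s hashlib has no Keccak-256 (its sha3_256 uses different padding), the sketch reimplements the hash. All function names are hypothetical. The code is slow and not constant-time, so treat it as an illustration and never use it with real keys.

```python
# Minimal sketch: Ethereum address from a private key, stdlib only.
# Illustration only: slow and not constant-time. Never use with real keys.

MASK = (1 << 64) - 1

def _rotl(v: int, n: int) -> int:
    return ((v << n) | (v >> (64 - n))) & MASK

def _rc_bit(t: int) -> int:
    """One output bit of the LFSR that generates Keccak's round constants."""
    if t % 255 == 0:
        return 1
    r = 1
    for _ in range(t % 255):
        r <<= 1
        if r & 0x100:
            r ^= 0x171
    return r & 1

RC = [sum(_rc_bit(j + 7 * i) << ((1 << j) - 1) for j in range(7)) for i in range(24)]
ROT = [0] * 25  # rotation offset for lane (x, y), stored at index x + 5*y
_x, _y = 1, 0
for _t in range(24):
    ROT[_x + 5 * _y] = ((_t + 1) * (_t + 2) // 2) % 64
    _x, _y = _y, (2 * _x + 3 * _y) % 5

def _keccak_f(a: list) -> list:
    """Keccak-f[1600] permutation over 25 64-bit lanes."""
    for rnd in range(24):
        c = [a[x] ^ a[x + 5] ^ a[x + 10] ^ a[x + 15] ^ a[x + 20] for x in range(5)]
        d = [c[(x - 1) % 5] ^ _rotl(c[(x + 1) % 5], 1) for x in range(5)]
        a = [a[i] ^ d[i % 5] for i in range(25)]                        # theta
        b = [0] * 25
        for x in range(5):
            for y in range(5):                                            # rho and pi
                b[y + 5 * ((2 * x + 3 * y) % 5)] = _rotl(a[x + 5 * y], ROT[x + 5 * y])
        a = [b[i] ^ (~b[(i + 1) % 5 + 5 * (i // 5)] & b[(i + 2) % 5 + 5 * (i // 5)])
             for i in range(25)]                                          # chi
        a[0] ^= RC[rnd]                                                   # iota
    return a

def keccak256(data: bytes) -> bytes:
    rate = 136                                # bytes absorbed per permutation
    msg = bytearray(data) + b"\x01"           # Keccak padding (SHA3-256 would use 0x06)
    msg += b"\x00" * (-len(msg) % rate)
    msg[-1] |= 0x80
    state = [0] * 25
    for off in range(0, len(msg), rate):
        for i in range(rate // 8):
            state[i] ^= int.from_bytes(msg[off + 8 * i: off + 8 * i + 8], "little")
        state = _keccak_f(state)
    return b"".join(lane.to_bytes(8, "little") for lane in state[:4])

# secp256k1 field prime and generator point.
P = 2**256 - 2**32 - 977
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)

def _add(p1, p2):
    """Affine point addition; None is the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if p1 == p2:
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P) % P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)

def _mul(k: int, point):
    """Double-and-add scalar multiplication."""
    result = None
    while k:
        if k & 1:
            result = _add(result, point)
        point = _add(point, point)
        k >>= 1
    return result

def address_from_private_key(priv: int) -> str:
    x, y = _mul(priv, G)                                   # public key = priv * G
    pub = x.to_bytes(32, "big") + y.to_bytes(32, "big")    # 64 bytes, no 0x04 prefix
    return "0x" + keccak256(pub)[-20:].hex()               # last 20 bytes of the hash

print(address_from_private_key(1))  # 0x7e5f4552091a69125d5dfcb7b8c2659029395bdf
```

Private key 1 is a well-known test key. The sketch prints its address in lowercase, without the EIP-55 checksum capitalization that wallets display.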

When you submit a signed transaction to Ethereum, the signature accomplishes two things: it authenticates your transaction in an unforgeable way, and it guarantees that no one was able to change the contents of the transaction (data integrity).

But you can sign more than transactions. You can use your private key to sign any kind of data (documents, images, messages, song files, etc.).
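Authenticity and integrity both come from ECDSA over the secp256k1 curve, which Ethereum uses. The toy sketch below shows those two properties on arbitrary bytes. It makes one deliberate substitution: it hashes with SHA-256 from hashlib instead of Ethereum’s Keccak-256. It also signs raw bytes rather than wallet message formats. As a result, its signatures are not interchangeable with real Ethereum signatures. The function names are hypothetical, and the code is not meant for real keys.

```python
import hashlib
import secrets

# secp256k1 domain parameters (the curve Ethereum uses).
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)

def _add(p1, p2):
    """Affine point addition; None is the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if p1 == p2:
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P) % P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)

def _mul(k: int, point):
    """Double-and-add scalar multiplication (not constant-time)."""
    result = None
    while k:
        if k & 1:
            result = _add(result, point)
        point = _add(point, point)
        k >>= 1
    return result

def _hash(message: bytes) -> int:
    # Assumption: SHA-256 stands in for Ethereum's Keccak-256.
    return int.from_bytes(hashlib.sha256(message).digest(), "big")

def generate_keypair():
    priv = secrets.randbelow(N - 1) + 1
    return priv, _mul(priv, G)

def sign(priv: int, message: bytes):
    z = _hash(message)
    while True:
        k = secrets.randbelow(N - 1) + 1      # fresh secret nonce for every signature
        r = _mul(k, G)[0] % N
        s = pow(k, -1, N) * (z + r * priv) % N
        if r and s:
            return r, s

def verify(pub, message: bytes, sig) -> bool:
    r, s = sig
    if not (0 < r < N and 0 < s < N):
        return False
    w = pow(s, -1, N)
    point = _add(_mul(_hash(message) * w % N, G), _mul(r * w % N, pub))
    return point is not None and point[0] % N == r

priv, pub = generate_keypair()
claim = b"Jane Smith has been a good pet sitter over the past 5 years."
sig = sign(priv, claim)
print(verify(pub, claim, sig))                           # True
print(verify(pub, claim.replace(b"good", b"bad"), sig))  # False: the contents changed
```

Changing a single word invalidates the signature, which is the data-integrity guarantee. Producing a valid signature without the private key is infeasible, which is the authenticity guarantee.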

Let’s examine how digital signatures would work for claims. If my neighbor wants to claim that Jane is a good pet sitter, she can simply say so in a message, and then sign the message. For instance:

“My neighbor, Jane Smith (0x00931d500eea10DcBD418ea2eBdE3C1DCa86564b) has been a good pet sitter over the past 5 years. By signing, I (0xcf9F9021e2594394b2A9d6115e9cc682d761368f) attest to this statement.”

Signing a message like this produces a signature, a 65-byte piece of data.[4]
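The “compact version” mentioned in note 4 can be illustrated as well. A 65-byte Ethereum signature is conventionally laid out as r (32 bytes), then s (32 bytes), then a one-byte recovery value v (27 or 28 for signed messages) that lets a verifier recover the signer’s public key. EIP-2098 defines a 64-byte compact form that folds v’s parity bit into the otherwise unused top bit of s. The helpers below are a sketch with hypothetical names, and the example bytes are placeholders, not a real signature.

```python
def split_signature(sig: bytes) -> tuple:
    """Split a 65-byte r || s || v signature into its parts."""
    if len(sig) != 65:
        raise ValueError("expected a 65-byte signature")
    return int.from_bytes(sig[:32], "big"), int.from_bytes(sig[32:64], "big"), sig[64]

def to_compact(sig: bytes) -> bytes:
    """EIP-2098: store v's parity bit in the top bit of s, giving 64 bytes."""
    r, s, v = split_signature(sig)
    y_parity = v - 27 if v >= 27 else v    # accept either 27/28 or 0/1
    if y_parity not in (0, 1) or s >> 255:
        raise ValueError("unexpected v, or s is not in low-s form")
    return r.to_bytes(32, "big") + ((y_parity << 255) | s).to_bytes(32, "big")

def from_compact(compact: bytes) -> bytes:
    """Expand a 64-byte EIP-2098 signature back to r || s || v with v in {27, 28}."""
    r = compact[:32]
    y_parity_and_s = int.from_bytes(compact[32:], "big")
    s = y_parity_and_s & ((1 << 255) - 1)
    v = 27 + (y_parity_and_s >> 255)
    return r + s.to_bytes(32, "big") + bytes([v])

# Placeholder bytes with the right shape; this is NOT a real signature.
fake_sig = bytes([0x11]) * 32 + bytes([0x22]) * 32 + bytes([28])
compact = to_compact(fake_sig)
print(len(fake_sig), len(compact))  # 65 64
```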

If someone sends you a claim like the one above, you can use public key cryptography[5] to verify[6] it. The content of the claim might still be false, but as long as my neighbor’s private key wasn’t stolen, you know that this is exactly what my neighbor claimed.
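Verification only works if the verifier hashes exactly the bytes the signer hashed. Wallets that implement personal_sign (MetaMask among them) follow EIP-191. They prepend a fixed prefix plus the message’s length in bytes, so a signed claim can never double as a signed transaction. A minimal sketch of building those bytes follows; the function name is hypothetical. The result is then hashed with Keccak-256 before signing or recovering the signer.

```python
def eip191_personal_message(message: str) -> bytes:
    """Bytes that personal_sign-style wallets hash before signing (EIP-191, version 0x45)."""
    body = message.encode("utf-8")
    # The length is the decimal byte count of the UTF-8 body, not the character count.
    return b"\x19Ethereum Signed Message:\n" + str(len(body)).encode("ascii") + body

print(eip191_personal_message("hi"))  # b'\x19Ethereum Signed Message:\n2hi'
```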

Importantly, signatures are created locally on your computer by default. You don’t need to submit them to a blockchain, and unless you share a signed claim, no one else has access to it. Because a signature is just data, you can store and share it in the same ways that you share other data: sending it in a text message, sending it in an email, putting it on a website, storing it in your Google Drive, etc.
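Because a signed claim is just data, packaging it for an email or a website can be as simple as a small JSON document. That document carries the three things a verifier needs (note 6): the message, the signature, and the signer’s address. The field names below are hypothetical, not a standard, and the signature value is a placeholder.

```python
import json

def pack_signed_claim(message: str, signature_hex: str, signer: str) -> str:
    """Serialize a signed claim so it can be emailed, texted, or posted anywhere."""
    return json.dumps({"message": message, "signature": signature_hex, "signer": signer}, indent=2)

def unpack_signed_claim(blob: str) -> tuple:
    """Recover the three inputs a verifier needs; no blockchain lookup is involved."""
    data = json.loads(blob)
    return data["message"], data["signature"], data["signer"]

blob = pack_signed_claim(
    "Jane Smith has been a good pet sitter over the past 5 years.",
    "0x" + "00" * 65,  # placeholder standing in for a real 65-byte signature
    "0xcf9F9021e2594394b2A9d6115e9cc682d761368f",
)
print(blob)
```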

(You might have noticed that the above message about Jane Smith is human-readable, but not very machine-readable. In order to have claims that machines can parse easily and that interoperate with code outside Ethereum, it’s beneficial to use standard data formats, like Verifiable Credentials[7]. Also, the people who work on Verifiable Credentials have thought about claims a lot more than the rest of us have. 😉)
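One option for a machine-readable version of the Jane Smith claim follows the shape of the W3C Verifiable Credentials Data Model (v1.1). Both parties are identified by did:pkh identifiers derived from their Ethereum addresses. This is a sketch only. The petSitter property is a hypothetical vocabulary, the issuance date is an example value, and the proof (signature) is omitted. Real VC proof formats define their own canonicalization. The sorted-key JSON used here is a simplification whose only purpose is to ensure the issuer and the verifier hash the same bytes.

```python
import json

def pet_sitter_credential(issuer_addr: str, subject_addr: str, issued: str) -> dict:
    """Build an unsigned, VC-shaped claim (hypothetical claim vocabulary)."""
    return {
        "@context": ["https://www.w3.org/2018/credentials/v1"],
        "type": ["VerifiableCredential"],
        "issuer": f"did:pkh:eip155:1:{issuer_addr}",
        "issuanceDate": issued,
        "credentialSubject": {
            "id": f"did:pkh:eip155:1:{subject_addr}",
            # Hypothetical property; a real deployment would define it in a JSON-LD context.
            "petSitter": {"assessment": "good", "yearsObserved": 5},
        },
    }

def signing_payload(credential: dict) -> bytes:
    # Simplification: sorted keys and fixed separators give deterministic bytes to sign.
    return json.dumps(credential, sort_keys=True, separators=(",", ":")).encode("utf-8")

vc = pet_sitter_credential(
    "0xcf9F9021e2594394b2A9d6115e9cc682d761368f",  # the neighbor (issuer)
    "0x00931d500eea10DcBD418ea2eBdE3C1DCa86564b",  # Jane Smith (subject)
    "2022-06-01T00:00:00Z",                        # example date
)
print(signing_payload(vc).decode())
```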

Why Using Tokens for Claims Is a Terrible Idea

As I said earlier, claims may be false, so they need to be evaluated. To evaluate a claim, you need to know:

- Who is the claim about? (the SUBJECT)

- What is the claim about? (the CONTENTS)

- Who made the claim? (the ISSUER, aka the signer of a signed claim)

- What information do I have about the issuer? (ISSUER REPUTATION)

(Evaluating a claim might require you to recursively traverse a graph of claims in order to assess the issuer’s reputation. For example, if I’m assessing my neighbor’s claim about Jane, I might ask what other people have said about my neighbor. I might also ask if those people are trustworthy, and so on and so forth.)
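The recursive traversal can be sketched as a breadth-first search over a graph of signed claims you have already verified. In that graph, an edge X → Y means “X signed a claim vouching for Y.” Evaluating claims is subjective, so this hypothetical helper deliberately does not compute a trust score. It only returns the chains of vouches connecting you to the issuer, for you to weigh yourself. The graph and names are made up for illustration.

```python
from collections import deque

def vouch_paths(vouches: dict, me: str, target: str, max_depth: int = 3) -> list:
    """Breadth-first search for chains of vouches from `me` to `target`.

    `vouches` maps each issuer to the subjects of claims they signed (claims you
    have already verified). Returns evidence paths, shortest first, not a score.
    """
    paths = []
    queue = deque([[me]])
    while queue:
        path = queue.popleft()
        if path[-1] == target and len(path) > 1:
            paths.append(path)
            continue
        if len(path) > max_depth:          # extending would exceed max_depth hops
            continue
        for subject in vouches.get(path[-1], []):
            if subject not in path:        # skip cycles
                queue.append(path + [subject])
    return paths

# Hypothetical graph of verified signed claims: issuer -> people they vouched for.
vouches = {
    "me": ["alice", "bob"],
    "alice": ["neighbor"],
    "bob": ["carol"],
    "carol": ["neighbor"],
    "neighbor": ["jane"],
}
for path in vouch_paths(vouches, "me", "neighbor"):
    print(" -> ".join(path))
# me -> alice -> neighbor
# me -> bob -> carol -> neighbor
```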

Tokens for claims are a terrible idea because they provide exactly the wrong affordances:

- Evaluating a claim requires knowing who the issuer is, but the current tooling makes it difficult to find out who created the token.

- Claims are deeply subjective, but tokens attached to an account wrongly look objective.

- Claims, like any information about people, should be as private as possible, but tokens on a public blockchain are public.

- Claims should be cheap to make, but non-fungible tokens cost around $50 to mint on Ethereum at the time of this post.

- Claims don’t need to be in an ordered transaction log and don’t need decentralized consensus with permissionless entry, but blockchains are slow and costly specifically because they provide these features.

- Claims can be false, but many people don’t understand that blockchains can contain false information.

Let’s take a look at each of these.

Knowing the issuer of a claim: With a token, how do I know who made the claim? Presumably it’d be the person who minted the token, but Ethereum doesn’t have a standard way of recording that information. In EIP-4973, they suggest that indexers use the transaction-level “from” field and get the data from event logs, which aren’t even accessible to smart contracts. Compare this to a signed claim, where it is very clear which account made the statement, and anyone, including smart contracts, can verify the signature.
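Ethereum signatures are recoverable. Given the message hash and (v, r, s), anyone can compute the signer’s public key; this is what Solidity’s built-in ecrecover does for smart contracts. The toy sketch below shows the math under stated simplifications:

- SHA-256 stands in for Keccak-256.
- The final hash-to-address step is omitted, so the sketch compares public keys instead of addresses.
- Astronomically rare edge cases are skipped.
- All names are hypothetical.

```python
import hashlib
import secrets

P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)

def _add(p1, p2):
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if p1 == p2:
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P) % P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (lam * lam - x1 - x2) % P
    return (x3, (lam * (x1 - x3) - y1) % P)

def _mul(k, point):
    result = None
    while k:
        if k & 1:
            result = _add(result, point)
        point = _add(point, point)
        k >>= 1
    return result

def _hash(message: bytes) -> int:
    # Assumption: SHA-256 stands in for Ethereum's Keccak-256.
    return int.from_bytes(hashlib.sha256(message).digest(), "big")

def sign_recoverable(priv: int, message: bytes):
    z = _hash(message)
    while True:
        k = secrets.randbelow(N - 1) + 1
        x, y = _mul(k, G)
        r, rec_id = x % N, y & 1            # rec_id records the parity of R's y coordinate
        s = pow(k, -1, N) * (z + r * priv) % N
        if r == 0 or s == 0 or x >= N:
            continue                        # skip rare cases this sketch does not handle
        if s > N // 2:                      # low-s form; negating s flips R's y parity
            s, rec_id = N - s, rec_id ^ 1
        return r, s, rec_id

def recover_public_key(message: bytes, r: int, s: int, rec_id: int):
    """Rebuild the signer's public key from the signature alone."""
    y = pow((r**3 + 7) % P, (P + 1) // 4, P)   # square root mod P (valid since P % 4 == 3)
    if y & 1 != rec_id:
        y = P - y
    r_inv = pow(r, -1, N)
    u1 = -_hash(message) * r_inv % N
    u2 = s * r_inv % N
    return _add(_mul(u1, G), _mul(u2, (r, y)))  # Q = r^-1 * (s*R - z*G)

priv = secrets.randbelow(N - 1) + 1
pub = _mul(priv, G)
claim = b"Jane Smith has been a good pet sitter over the past 5 years."
r, s, rec_id = sign_recoverable(priv, claim)
print(recover_public_key(claim, r, s, rec_id) == pub)  # True
```

The issuer is fully determined by the claim and its signature. No indexer, event log, or transaction metadata is needed.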

Tokens wrongly look objective: If someone makes an untrue claim about me, I can say they are wrong, and that people should ignore it. People might not believe me, but at least they understand that claims about other people might be untrue. But if someone can make a token “in my account” that shows up anytime anyone looks up my address, it’s much harder to ignore. It’s deeply connected to my account in a way that doesn’t allow other people to decide whether to pay attention to it or not. Furthermore, trying to fix this by allowing people to choose what tokens show up for their own address would be a very bad decision. For example, the Me Too movement would have been stopped completely if someone like Harvey Weinstein could have hidden negative claims about himself[8]. Because claims have to be subjectively evaluated, allowing other people to make claims that seem objective to outside parties is simply the wrong design. Signed claims do a better job than tokens in indicating subjectivity because they aren’t attached automatically to the subject, and their truth depends on the trustworthiness of the issuer.

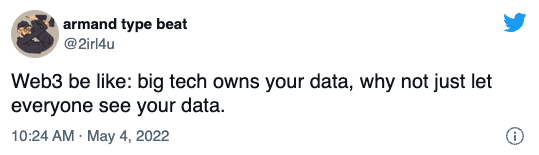

Tokens are public: Claims should be as private as possible, but tokens on a public blockchain are public. Claims made in the clear on a public blockchain are tremendously dangerous: authoritarian regimes and data-siphoning big tech would LOVE them. The paper admits this: “without explicit measures taken to protect privacy, the ‘naive’ vision of simply putting all SBTs on-chain may well make too much information public for many applications.” However, after admitting the problems of public tokens, the paper still advocates for them as a good place to start, saying “most of our applications depend on some level of publicity.” And again, this is what people are actively building right now.

Tokens are expensive to create: Signed claims are completely free to create and verify; it’s just running a little bit of code. By contrast, minting an NFT on the Ethereum mainnet right now costs about $50. Imagine every claim costing $50.

Decentralized blockchains probably aren’t the right place to put claims[9]: Claims are just one entity talking about another entity. With signed claims, we can use cryptography to verify that the issuer made the claim. Having multiple nodes verify the same signature doesn’t give you anything.

Now, it would be extremely helpful to ask other people whether they think the claim is valid, but blockchain consensus doesn’t do anything like that. The way a blockchain works is “garbage in, garbage out”, unless it’s something that the blockchain code specifically checks, like whether a transaction correctly authorizes a token transfer. (And even then, the consensus is for adding things to the ordered transaction log, not for verifying things you can verify independently with cryptography.) Using decentralized blockchain technology for claims is unnecessary and promotes a naive understanding of what blockchains are good for.

People still don’t understand that blockchains can contain incorrect information: It pains me to admit this, but I suspect that a non-trivial number of blockchain retail investors think that information on a blockchain is more credible because of “consensus”. They don’t realize that you can mint the same image as an NFT many times, and nothing about a blockchain stops you. In the same way, they don’t realize that you can put whatever data you want on a blockchain: if it’s not something the blockchain is specifically programmed to validate and reject, it will be added to the chain even if the data is false. Tokens on a blockchain send the average person the wrong message about their duty to evaluate claims for themselves.

Conclusion

Again, my main goal in writing this post is not to evaluate the paper as a paper, but to say that implementing claims as tokens on blockchains is a bad idea and should be stopped. If we can agree on that, then I’ve accomplished my goal.

Notes

[1] The W3C Verifiable Claims Group has been working on the issue for years and has a number of Web3 companies represented.

[2] This section has been edited because the original text further confused the goal of EIP-4973, which does not intend to implement claims or credentials.

[3] MyCrypto has an excellent write-up of how digital signatures are used in Ethereum.

[4] There’s also a compact version.

[5] MetaMask has some excellent libraries and endpoints for signing messages and verifying signatures.

[6] To verify, you need to know the message that was signed, the signature, and the public key or address of the signer.

[7] For more on verifiable credentials, I recommend a great talk by Evin McMullen: Decentralized Identity and Verifiable Credentials.

[8] The ability to forward a signed claim means that for use cases such as the Me Too movement, people making claims should only make signed claims under their own identity when they are comfortable with the information potentially leaking. Some alternatives are: having someone else sign on your behalf (“someone who will remain nameless has told me x”), making signed claims under a different identity, and making unsigned claims. Ring signatures may also be helpful. With ring signatures, I can prove to you that someone from this group made a claim but without revealing which exact person did.

[9] Some use cases would benefit from knowing that a claim was created at a certain point in time, and was not backdated. For that, I would suggest publishing the hash to a cheap, centralized timestamping service which uses hash pointers rather than a full-blown decentralized blockchain. More on this to come.
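The hash-pointer timestamping idea from the last note can be sketched in a few lines. Each entry records three things: the hash of a signed claim (not the claim itself), a timestamp, and the hash of the previous entry. Altering or backdating any earlier entry therefore breaks the pointer that follows it. This is a hypothetical in-memory illustration, not a description of any existing service. A real service would presumably also need to publish its latest digest somewhere others can see, so the operator cannot quietly rewrite the whole chain.

```python
import hashlib
import time
from dataclasses import dataclass

GENESIS = "0" * 64  # pointer value for the first entry

@dataclass(frozen=True)
class Entry:
    claim_hash: str   # SHA-256 of the signed claim; the claim itself stays private
    timestamp: float
    prev_hash: str    # hash pointer to the previous entry

    def digest(self) -> str:
        data = f"{self.claim_hash}|{self.timestamp!r}|{self.prev_hash}".encode()
        return hashlib.sha256(data).hexdigest()

class TimestampLog:
    """Hypothetical append-only log run by a single (centralized) operator."""

    def __init__(self):
        self.entries = []

    def publish(self, signed_claim: bytes, timestamp=None) -> str:
        prev = self.entries[-1].digest() if self.entries else GENESIS
        when = time.time() if timestamp is None else timestamp
        self.entries.append(Entry(hashlib.sha256(signed_claim).hexdigest(), when, prev))
        return self.entries[-1].digest()  # new head digest, worth publishing widely

    def verify_chain(self, expected_head: str) -> bool:
        prev = GENESIS
        for entry in self.entries:
            if entry.prev_hash != prev:
                return False
            prev = entry.digest()
        return prev == expected_head

log = TimestampLog()
log.publish(b"signed claim A", timestamp=1.0)
head = log.publish(b"signed claim B", timestamp=2.0)
print(log.verify_chain(head))  # True
```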